iPad AI

Following on from my previous post on things that I wanted to try on my iPad, I have spent a bit of time playing with AI art generation on the iPad.

Going into this I was not expecting to be able to do much more than run a paid app or website that was just a front end for a Stable Diffusion backend.

Background

I have been spending a fair bit of time over the last year or so playing with AI art generators, especially Stable Diffusion. My first experience was with Midjourney when it was just a Discord server that gave free credits. Most of the work I made then was pretty terrible, as neither I nor many others really understood the idea of prompt generation.

Since then I have been using Stable Diffusion locally on my desktop, both in Windows and in Linux. Sadly, now that I have an AMD GPU I am limited in which apps I can use in Windows, but I am still going strong in Arch.

While I have been doing this for a while I am certainly no expert, and in fact I rarely come up with my own prompts. Instead I take other public prompts and customise them to suit my needs. For example, here is an image that I made for a character in a Dungeons and Dragons game that I am in:

What I use it for

AI art generation is a contentious issue at the moment, and one of the more persuasive arguments against it is that it is putting artists out of work. For me personally, the benefit of AI is that in cases where I would never commission a piece of artwork, like my character portrait above, I can still get an image that ‘will do’.

Another example is most of the images in this blog, an idea I took from Xe Iaso, who puts generated images at the top of many of their blog posts. I have done this on a few of my articles but not all. Again, this is something that I would not commission someone to do, so no money or work has been lost by artists.

More Examples

Generating Images on the iPad

Apps

I tried many apps in the search for a working image generator. I didn’t want a frontend to some expensive service like I mentioned above. What I was really hoping for was an app that would leverage the iPad Pro’s M4 chip to generate the images locally. If I could download models and LoRAs to use, then even better.

The first app I tried was Wonder, which sadly turned out to be a frontend app. It is nicely made but still not what I was looking for.

The second app I found was WOMBO Dream which was the same.

At this point I realised that I needed to alter my search parameters to specify that I wanted it to run natively. After finding a really interesting article on how to implement it in your own React Native app (interesting but not what I was looking for) I eventually stumbled onto Draw Things: AI Generation.

Draw Things

This app immediately gave me some very familiar controls and even prompted me to download a model that had been optimised for iPad alongside a lot of community optimised models (including an old favourite Counterfeit).

UI

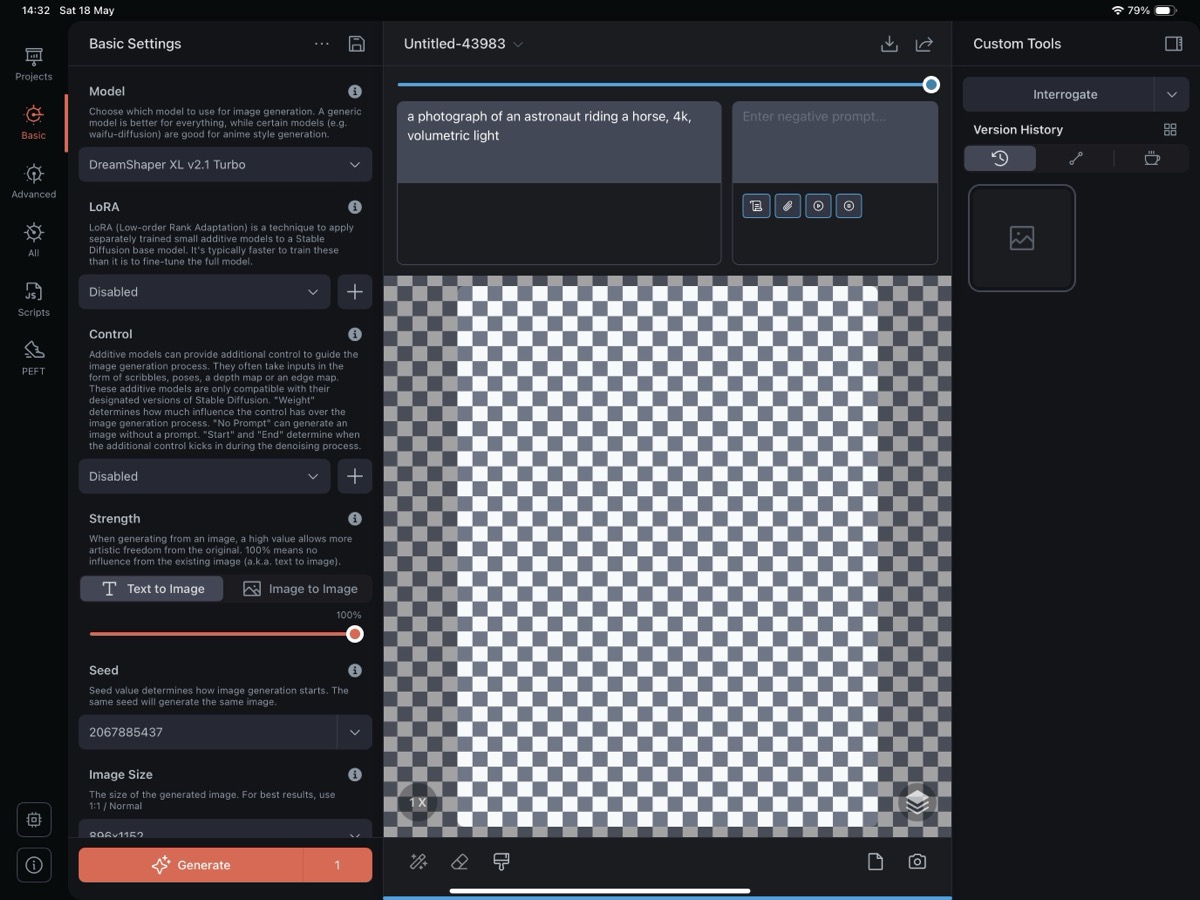

Draw Things started off with a little wizard to pick a model to use (I chose DreamShaperXL) and specify a few configuration parameters like where to save images. After doing a basic setup it presented me with the above. Anyone familiar with the Stable Diffusion web UI would immediately recognise many of the options presented: the positive and negative prompts, the steps, text guidance (CFG scale), image size, LoRA, upscaler, sampler, and many more.
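For anyone who has not used the Stable Diffusion web UI, the options listed above can be pictured as one small bundle of settings. The sketch below is purely illustrative: `GenerationSettings` and its field names are hypothetical, the default values are placeholders rather than the ones I used, and none of this is Draw Things' actual API or file format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class GenerationSettings:
    """Hypothetical grouping of the usual Stable Diffusion controls."""

    model: str                       # checkpoint to generate with
    prompt: str                      # positive prompt: what you want to see
    negative_prompt: str = ""        # what you want the image to avoid
    steps: int = 30                  # number of denoising steps (placeholder value)
    cfg_scale: float = 7.0           # text guidance strength (placeholder value)
    width: int = 1024                # image size in pixels (placeholder value)
    height: int = 1024
    sampler: str = "Euler a"         # sampling method (placeholder value)
    lora: Optional[str] = None       # optional LoRA applied on top of the model
    upscaler: Optional[str] = None   # optional upscaler run after generation


# Example usage with a made-up prompt.
settings = GenerationSettings(
    model="DreamShaperXL",
    prompt="portrait of a half-elf ranger, detailed, fantasy art",
    negative_prompt="blurry, low quality",
)
print(settings)
```

Recreating someone else's image mostly comes down to copying each of these values, plus the seed if it is published, from their post.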

I immediately went onto CivitAI to find the model so I could find some basic images that people had made and see if I could recreate them. This was the first result from this base image.

I was pretty happy with that result, so I immediately started looking for one that I could recreate as a banner image for this post. I found this base image and generated the banner image from it.

Performance

It is certainly not as fast as my desktop, but given the power difference between an iPad and my PC that is not surprising. Generating the banner image for this article took 2 minutes and 41 seconds including an upscaler. Without the upscaler, generating the image took 1 minute and 36 seconds.

Obviously this speed pales in comparison with some of the setups out there, but being able to use a model that I have downloaded previously to generate an image in 1 or 2 minutes on the go is incredible to me.

The kicker, however, is that generating images pushes the M4 cores and the memory to the limit, which means it consumes battery like crazy. I had roughly 86% battery left on my new iPad Pro, and after generating 3 or 4 images I was down to 67% in less than 15 minutes. Something I will need to keep an eye on.

The iPad also started to get warm during the generation. I kept an eye on it as much as I could without touching the screen, and while it got warm there were no noticeable hotspots and the temperature never got to the point that I was worried.
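To put the timing and battery numbers in perspective, here is a rough back-of-the-envelope calculation. It only uses the figures quoted in this section, and it assumes the battery drains linearly and that the session lasted the full 15 minutes, so treat the results as ballpark estimates rather than measurements.

```python
def to_seconds(minutes: int, seconds: int) -> int:
    """Convert a minutes/seconds pair into total seconds."""
    return minutes * 60 + seconds


# Timings quoted for the banner image.
with_upscaler = to_seconds(2, 41)
without_upscaler = to_seconds(1, 36)
upscaler_overhead = with_upscaler - without_upscaler  # extra seconds spent upscaling

# Battery figures quoted for the session (percent).
battery_start = 86
battery_end = 67
battery_drop = battery_start - battery_end

# "Less than 15 minutes" is treated as exactly 15, so this is a lower bound on drain.
session_minutes = 15
min_drain_per_minute = battery_drop / session_minutes

# "3 or 4 images" gives a range for the cost of a single image.
drop_per_image_low = battery_drop / 4
drop_per_image_high = battery_drop / 3

# Assumption: linear drain. At this rate the remaining charge lasts at most this long.
max_minutes_left = battery_end / min_drain_per_minute

print(f"Upscaler overhead: {upscaler_overhead} s")
print(f"Battery drop: {battery_drop} points, at least {min_drain_per_minute:.2f} per minute")
print(f"Per image: {drop_per_image_low:.2f} to {drop_per_image_high:.2f} points")
print(f"Remaining charge at that rate: at most {max_minutes_left:.0f} minutes")
```

In other words, the upscaler added a little over a minute, each image cost somewhere around 5 or 6 percentage points of battery, and generating non-stop would flatten the remaining charge in under an hour.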

The Future

I think this will be an interesting journey to go on. I like the ability to generate images on the go, though I will have to be selective about which models I download as they can take up quite a lot of space. There may be an option in Draw Things to import models from a local device, in which case I can load up a memory stick or use my external NVMe drive to house a bunch of models and LoRAs to use when I want. Depending on how this comes out when I publish it, the idea of always having a banner image that I generate appeals to me greatly.

I will also be using this as a learning tool to improve my prompt generation and see just what I can come up with in the future. It will probably be a lot of pictures of my DnD characters to start with, but who knows? The possibilities seem endless.

Gallery

Here is a gallery of things I am working on.